Large Language Models are exceptionally good at producing fluent text.

They are not inherently good at knowing when not to answer.

In regulated or compliance-sensitive environments, this distinction is critical.

A linguistically plausible answer that is not grounded in official documentation is often worse than no answer at all.

This article describes a practical architecture for handling language detection, translation intent, and multilingual retrieval in an enterprise AI system — with a strong emphasis on determinism, evidence-first behavior, and hallucination prevention.

The examples are intentionally domain-neutral, but the patterns apply to legal, regulatory, financial, and policy-driven systems.

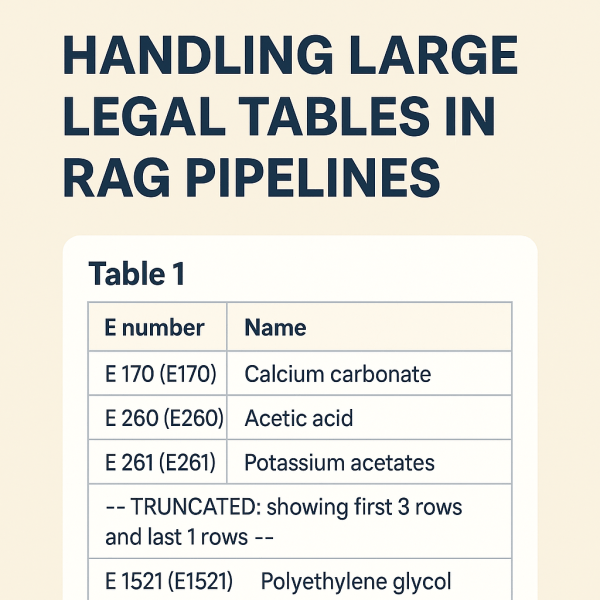

The Core Problem

Consider these seemingly simple user questions:

"What is E104?"

"Slovenski prevod za E104?"

"Hrvatski prevod za E104?"

"Kaj je E104 v slovaščini?"

"Slovenski prevod za Curcumin?"At first glance, these look like:

- definitions

- translations

- or simple multilingual queries

A naïve LLM-only approach will happily generate answers for all of them.

But in a regulated environment, each of these questions carries a different risk profile:

- Some require retrieval

- Some require translation

- Some require terminology resolution

- Some should result in a deterministic refusal

The challenge is not generating text —

it is deciding which answers are allowed to exist.

Key Design Principle: Evidence Before Language

The system described here follows one non-negotiable rule:

Language is applied after evidence is proven, never before.

This means:

- Language preference never expands the answer space

- Translation never invents facts

- Missing official wording is explicitly acknowledged

Continue reading “Designing Language-Safe AI Systems: Deterministic Guardrails for Multilingual Enterprise AI”