When working with legal or regulatory documents (such as EU legislation), one of the deceptively hard problems is correctly modeling hierarchical lists. These documents are full of nested structures like:

- numbered paragraphs (1., 2.),

- lettered items ((a), (b)),

- roman numerals ((i), (ii), (iii)),

often mixed with free-text paragraphs, definitions, and exceptions.
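Even the marker types are ambiguous: `(i)` may be the ninth lettered item or the first roman numeral. Below is a minimal Python sketch of one way to classify markers. It assumes the markers have already been extracted as strings. `classify` and its `prev_letter` parameter are hypothetical names, and the "directly follows the previous letter" rule is an illustrative heuristic, not the author's method:

```python
import re
from typing import Optional

# Hypothetical marker patterns; real EUR-Lex markup may need more variants.
NUMBERED = re.compile(r"^\d+\.$")                 # 1.  2.
PARENTHESISED = re.compile(r"^\(([a-z]+)\)$")     # (a)  (b)  (i)  (ii)
# Roman numerals up to xxxix, assumed to be enough for list items.
ROMAN = re.compile(r"^(x{0,3})(ix|iv|v?i{0,3})$")


def classify(marker: str, prev_letter: Optional[str] = None) -> str:
    """Return 'number', 'letter' or 'roman' for a single list marker.

    prev_letter is the last lettered label seen at the current level
    (e.g. 'h'); it resolves the ambiguous '(i)', '(v)' and '(x)' cases.
    """
    marker = marker.strip()
    if NUMBERED.match(marker):
        return "number"
    m = PARENTHESISED.match(marker)
    if m is None:
        raise ValueError(f"unrecognised marker: {marker!r}")
    body = m.group(1)
    if not ROMAN.match(body):
        return "letter"
    # 'i', 'v' and 'x' are valid letters too: if the label directly follows
    # the previous letter (h -> i, u -> v, w -> x), keep it in the lettered list.
    if len(body) == 1 and prev_letter is not None and ord(body) == ord(prev_letter) + 1:
        return "letter"
    return "roman"
```

With this heuristic, `classify("(i)")` returns `"roman"`, while `classify("(i)", prev_letter="h")` returns `"letter"`.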

At first glance, this looks simple. In practice, it’s one of the main sources of downstream errors in search, retrieval, and AI-assisted answering.

The Core Problem

HTML representations of legal texts (e.g. EUR-Lex) are structurally inconsistent:

- nesting depth is not reliable,

- list items are often rendered using generic `<div>` grids,

- numbering may reset visually without resetting the DOM hierarchy,

- multiple paragraphs can belong to the same logical list item.

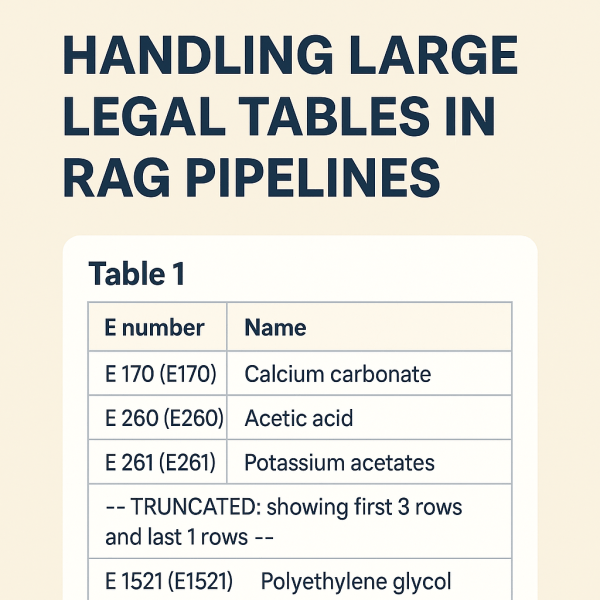

If you naïvely chunk text or rely on DOM depth alone, you end up with:

- definitions split across chunks,

- list items grouped incorrectly,

- or worst of all: unrelated provisions merged together.

Once this happens, downstream agents or LLMs are forced to guess the structure, which leads to hallucinations, missing conditions, or incorrect legal interpretations.
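One way to avoid relying on DOM depth is to derive nesting from the marker type alone. The sketch below makes two assumptions: each block has already been tagged as `number`, `letter`, `roman` or `None` (unmarked), and nesting always runs `1.` > `(a)` > `(i)`. `build_tree` and `Item` are hypothetical names, not the author's implementation:

```python
from dataclasses import dataclass, field
from typing import Optional

# Assumed fixed nesting order for this sketch: 1. > (a) > (i).
LEVEL = {"number": 0, "letter": 1, "roman": 2}


@dataclass
class Item:
    label: str
    paragraphs: list[str]
    children: list["Item"] = field(default_factory=list)


def build_tree(blocks: list[tuple[Optional[str], str, str]]) -> list[Item]:
    """blocks: (kind, label, text) in document order; kind None means no marker."""
    roots: list[Item] = []
    stack: list[tuple[int, Item]] = []  # (level, item) for each currently open item
    for kind, label, text in blocks:
        if kind is None:
            # Unmarked paragraph: treat it as a continuation of the innermost open item.
            if stack:
                stack[-1][1].paragraphs.append(text)
            else:
                roots.append(Item(label="", paragraphs=[text]))
            continue
        level = LEVEL[kind]
        # Close items at the same or a deeper level; DOM depth is never consulted.
        while stack and stack[-1][0] >= level:
            stack.pop()
        item = Item(label=label, paragraphs=[text])
        (stack[-1][1].children if stack else roots).append(item)
        stack.append((level, item))
    return roots
```

Attaching unmarked paragraphs to the innermost open item keeps multi-paragraph items together. This is a simplification: text that follows a sub-list (for example a closing proviso) may logically belong to the parent item, and real documents can nest in a different order.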

The Design Goal

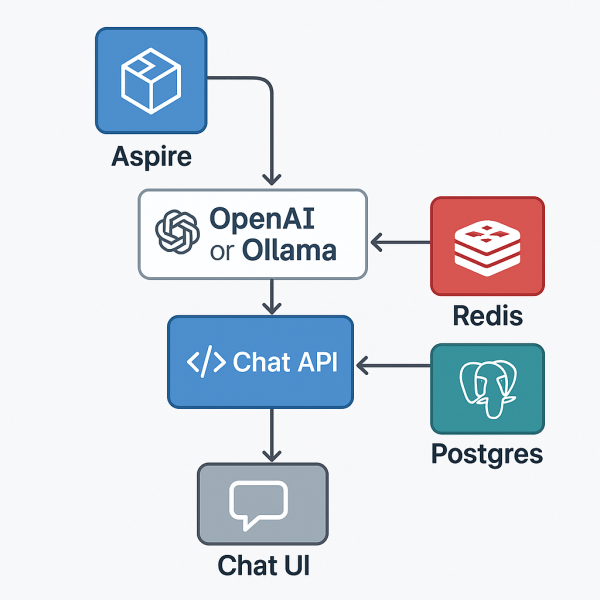

The goal was not to “understand” the document using an LLM.

The goal was to:

- encode the document’s logical structure deterministically at index time, so that:

  - list hierarchy is explicit,

  - grouping is stable,

  - and retrieval can be purely mechanical.

In other words: make the data correct so the AI doesn’t have to be clever.
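Once each paragraph carries its full list path, grouping and retrieval reduce to prefix comparisons. Below is a minimal sketch. It assumes paragraphs are stored in document order as `(path, text)` records. The record shape and the `group_chunks` and `under` helpers are hypothetical:

```python
from itertools import groupby

# Hypothetical index record: (list path from article down to item, paragraph text).
Record = tuple[tuple[str, ...], str]


def group_chunks(records: list[Record], depth: int) -> list[dict]:
    """Merge consecutive records whose paths share the first `depth` components."""
    chunks = []
    for prefix, group in groupby(records, key=lambda r: r[0][:depth]):
        chunks.append({
            "path": " > ".join(prefix),                    # explicit hierarchy as metadata
            "text": "\n".join(text for _, text in group),
        })
    return chunks


def under(records: list[Record], prefix: tuple[str, ...]) -> list[str]:
    """Mechanical retrieval: every paragraph at or below `prefix`, in order."""
    return [text for path, text in records if path[:len(prefix)] == prefix]
```

Take records with paths such as `("Art. 3", "1.", "(b)", "(i)")`. With these, `group_chunks(records, depth=2)` keeps paragraph 1 of Article 3 and all of its lettered and roman sub-items in a single chunk, so a definition is never split from its conditions. `under(records, ("Art. 3", "1.", "(b)"))` returns point (b) together with its sub-points.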

Continue reading “Solving Hierarchical List Parsing in Legal Documents (Without LLM Guessing)”